Neural Network from Scratch

A tutorial on the basics of neural networks and how to make one yourself from scratch

Today I'm going to walk you through building a simple neural network from scratch. If you're new to this, don't worry - No prior knowledge required. If this is your first step into the world of AI, welcome :) If you already know a bit about the field, I hope this document will deepen your understanding of the mechanisms behind neural networks.

Today we will be training a model to identify and classify handwritten digits using the MNIST dataset. This is a very famous dataset in machine learning. It consists of 60,000 labelled images of handwritten digits 0 to 9. We are going to train a model to correctly label digits as it sees them, with over 90% accuracy! Are you ready? Let's begin!

Firstly, What Is a Neural Network?

A neural network is exactly what is says on the tin: It's a network of neurons.

A neuron in this context, is essentially a function. It takes a numerical input, applies some operations to it, and spits out a numerical output. For a simple neuron, there are 3 parameters to consider:

-

Weights (W)

-

Bias (b)

-

Activation Function (f)

Let's look at each in turn.

Weights

The "weights" are scalar numbers we multiply our inputs by to scale them in some way. We initialise them pseudo-randomly, and the machine will learn to optimise them as it goes along (more on that later). The weights essentially emphasise the more important inputs and minimise the effect of less important ones.

Bias

The bias controls how "trigger happy" the neuron is. Changing the bias will affect how "biased" our neuron is towards high or low activation values.

Activation Function

This function "squishes" the output between a certain range, to transform our output and make it more useful for the next stage. Common activation functions between layers are sigmoid, which squishes all values between [0,1] and ReLU, which makes all values >= 0

A neural network is then just a network comprised of "layers" of these neurons. At each layer, the output from the previous layer is passed forward as input to the next layer. We can have as many layers and as many neurons per layer as our heart desires. However, having too few will make your model inaccurate and less adept at learning (imagine if you had too few neurons in your brain, you'd probably find it pretty hard to learn), while having too many will make your model more difficult and time consuming to train.

Right, now that you know what a neural network is, let's get to building one!

The Program

We will only be using 3 python libraries for this project: NumPy, Pandas, MatPlotLib. If you're not familiar with them:

-

NumPy gives us basic maths & linear algebra functionality

-

Pandas allows us to read and setup dataframes,

-

MatPlotLib allows us to graph and display our images

Data Preparation

For this project we will be using the MNIST digits dataset. This is a famous dataset in data science as it is frequently used for illustrating the basics of neural networks and machine classification, which makes it perfect for us!

The data is imported as a pandas dataframe, as pandas offers excellent data cleaning and manipulation tools. The data is separated into labels (y) and pixel values (x). The x values are normalised between [0, 1] and the y values are one-hot encoded (converted to binary indicator vectors). Finally, the data is split into a training and a test set. An 80/20 split is used here.

Neural Network Structure

This will be quite a simple network. We will have:

-

an input layer of 784 neurons (one for each pixel value)

-

an output layer of 10 neurons (one for each label)

-

two hidden layers of 32 neurons (where the magic happens).

The number of neurons in the hidden layers was chosen completely arbitrarily: I just happen to like the number 32. Feel free to mess around with different numbers of neurons to see how it affects the performance of the model.

Visualizing the Data

It's no fun training an image classification model if we don't get to see the images it's classifying! Let's take a look now at some of the images from our dataset so we can see what we're dealing with. I don't know about you, but I think I would struggle with classifying some of these!

As we mentioned before, a neural network is essentially a composition of layers of "functions", where in each neuron the activations from the previous layer are scaled by some weight and a bias is added. Then the whole output is squished through an activation function and sent off to the next layer. Before we begin training the model, we must initialise these weights, biases and activation functions:

Here I'm just initialising the weights randomly, and setting the biases to zero. There are more sophisticated initialisation methods, but they're outside the scope of our simple example

Here the activation and loss functions are also defined for later use, as well as the accuracy metric. This model will utilise ReLU and softmax activations with cross-entropy loss. I'll explain these in further detail later on in the guide. The accuracy metric simply tracks the proportion of correct guesses the model makes.

Forward Propagation

The first step in training a model is the forward propagation pass. Essentially, this is just feeding an image into the network, and having the info pass all the way through, layer by later until the end. The steps involved in this are:

-

Calculating the weighted sum of the inputs (plus bias) at each layer:

-

Feeding this into our activation function f to get the next activations:

-

Rinse and repeat until you hit the output layer, then you're done :)

This is a pretty simple mechanism for feeding the data forward. For our specific model, we will have two hidden layers using the weights, biases and activation functions that we defined above. Let's take a look more explicitly at them:

-

First Layer: Here our activation function is ReLU (Rectified Linear Unit), which scales all inputs to values

-

Second Layer: Here our activation function is softmax, which turns our output layer into a vector of probabilities, so our output layer can be read as the model's predictions of how likely it thinks this image is to be each number. We then extract its guess as the number it gave the highest probability

Here's an implementation of forward propagation as a function in python:

Backpropagation & Gradient Descent

Loss Function

How will we do this? Firstly we define a "loss function", which will quantify how good or bad our current prediction is. A high loss value means we are way off, and a low one means we are quite accurate. There are many options for loss functions, two common ones for classification tasks such as this are:

These both involve transforming our labels y into a binary or one-hot vector. It is called this as it is a vector of all zeros, except for one 1 in the entry corresponding with the correct label. (For example, the two entry for <2, 4, 6> would become <0,1,0,0,0,0>). This is very useful for us in calculating our loss functions.

Since we initialised the weights at random, our output from the forward pass is nothing more than a terrible guess. We need some way to adjust these weights and biases, to "train" the model and improve its predictions. That's where backpropagation comes in!

Backpropagation

Backpropagation is the way by which this network will actually "learn". It's an algorithm for iteratively updating the weights and biases after each forward pass, to tweak the network a bit and produce more and more accurate predictions. More technically, backpropagation is an autodifferentiation method that calculates the gradient (slope) of the loss function with respect to the weights and biases. It then updates each parameter by subtracting this gradient, scaled by a learning rate

Taking a step back, what we are essentially trying to do through backpropagation is, once we have made our guess through the forward pass, we want to see how small changes in each weight and bias would affect our output, and identify which weights and biases are the most important for us to change. Which ones will give us the most bang for our buck. We'll look at the explicit steps in a minute when we write our code for it.

Gradient Descent

Gradient descent is a method for minimising loss functions. Our objective is to be as accurate as possible, so we want our loss to be as low as possible. We do this by calculating the gradient of the cost function using backpropagation, then updating all the parameters by subtracting their corresponding gradient, scaled by a "learning rate". This learning rate affects, you guessed it, the rate at which our network learns. More specifically, it controls how big a "step" we take each time we update our parameters.

You can imagine gradient descent as a person walking down a foggy hill. The height of the hill is our loss, so we want to get as far down the hill as possible, but we can't see very far so we don't have enough information to set a straight path to the lowest valley. We have to do this iteratively, one step at a time, to update our information as we go. Gradient descent calculates the direction we should take our next step to go down the hill as efficiently as possible, and the learning rate determines how big a step we take.

Now, it's time to put backpropagation into practice. For our specific model here, the steps in backpropagation are as follows:

-

Compute the Loss: Here we pick our loss function, which will tell us how wrong our predictions are. For this model, we will use Cross Entropy as its derivative will simplify very nicely and make life easy for us later.

-

Backpropagate the Error: For each layer starting from the output layer and working back, calculate the gradient of the loss function with respect to the weights & biases for this layer, and activations from the previous layer:

-

The gradient of the loss function with respect to the output layer:

-

The gradient of the loss function with respect to the weights:

-

The gradient of the loss function with respect to the biases:

-

The error for the previous layer:

-

I will not prove or derive these equations in the interest of time, but if you are interested in where they come from, I would highly reccommend reading this fantastic explanation

-

Update the Parameters: Now that we've calculated the gradients, we can update our parameters and take a step downhill:

-

Update weights:

-

Update biases:

-

-

Rinse and Repeat: We keep doing this over and over as the model gradually improves and "learns". We can either run this algorithm for a fixed time (say 500 steps) or until our loss is below a desired threshold. However if you pick a very low threshold, it may take a long time for the model to reach it, or it in fact may never reach it, so we will be using a fixed time.

One drawback gradient descent can face is that calculating all of these gradients backwards through the entire network can be very computationally expensive, and takes ages. In this tutorial, we will be implementing a variant of gradient descent known as mini-batch gradient descent. This variant addresses this problem by randomly sampling small subsets of the neurons, and performing gradient descent on those. While this will obviously be less accurate, it is far faster and the accuracy tradeoff is really not as large as you might imagine.

I hope that clarifies the steps of backpropagation and gradient descent somewhat. Now let's see it working in code!

Training the Network

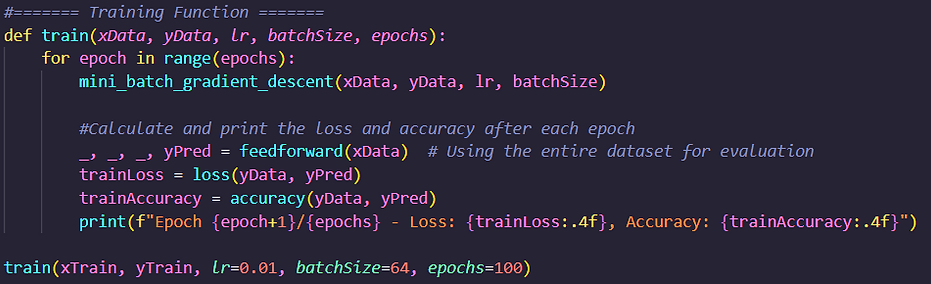

Here we define the training function. It takes the x and y data as inputs, along with the learning rate, batch size and number of epochs. The function then iterates over the epochs, and for each epoch performs minibatch gradient descent and prints the loss and accuracy.

Testing the Model

Now that we've trained the model, we need to test it using the data we put aside at the start. This is a crucial step as it allows us to gauge how well the model performs on images it has never seen before. We don't want a model that works really well on its training data but is useless on new images. That is called overfitting, and we want to avoid that as much as possible. So, let's see how well our model has learned, shall we?

Test accuracy of over 90%, our model is a success! It generalised very well from the training data and can correctly classify new, unseen images with over 90% accuracy!

Now, let's take a look at some of the numbers our model got wrong. This can give us an insight into what patterns the model may be picking up on

Looking at some of the ones our bot misclassified, I almost feel bad for it. I'm not sure I would have gotten some of those correct either!

So, that's that! We successfully built a bot from scratch that can recognise handwritten digits with over 90% accuracy! I hope you enjoyed our little experiment today, and I hope you've learned something interesting. Now you can tell all your friends you know how to build a neural network from scratch!

If you enjoyed this and thought it was cool, I highly recommend you go through the code and mess with some of the parameters to get a better feel for how it works. Perhaps try adding another layer to see if it improves the accuracy. Can you get it to 95% accuracy?

You can find the python script for this simple network on my Github if you'd like to see how it all fits together. Otherwise you can find the notebook with all this code and explanations below on my Google Colab. I encourage you to mess around with the code to get a better feel for it. The only way to truly learn something is by doing it yourself!

This project was really fun to make. I'm learning about neural networks in my degree at the moment, and this seemed like an excellent way to really test my understanding of the core concepts. I hope you enjoyed, and I look forward to more similar projects in the future :)